Autonomous AI agents are goal-driven systems that plan, act, and adapt to complete tasks with minimal human input. To reduce risk and ship value fast, inventory your automations, pilot hybrid agents, add monitoring and safety gates, and iterate with human oversight.

Introduction

Autonomous AI agents are the focus of this guide. It is an informational post with hands-on, practical guidance so you can decide whether to build, buy, or pilot agents in your stack. You’ll get clear definitions, an implementation workflow, code you can copy, recommended tools, and a compliance checklist, plus notes on fixing low-CTR titles and meta descriptions with shareable, authoritative content. I’ll reference OpenAI’s agent playbook and industry reporting to ground best practices and risk signals (OpenAI Platform).

In my experience, small, iterative pilots win: start with a single use case that saves measurable time, instrument everything, and require human approval for high-risk steps. This approach reduces false starts and gives you data to improve both UX and SEO.

What autonomous AI agents are, and why they matter

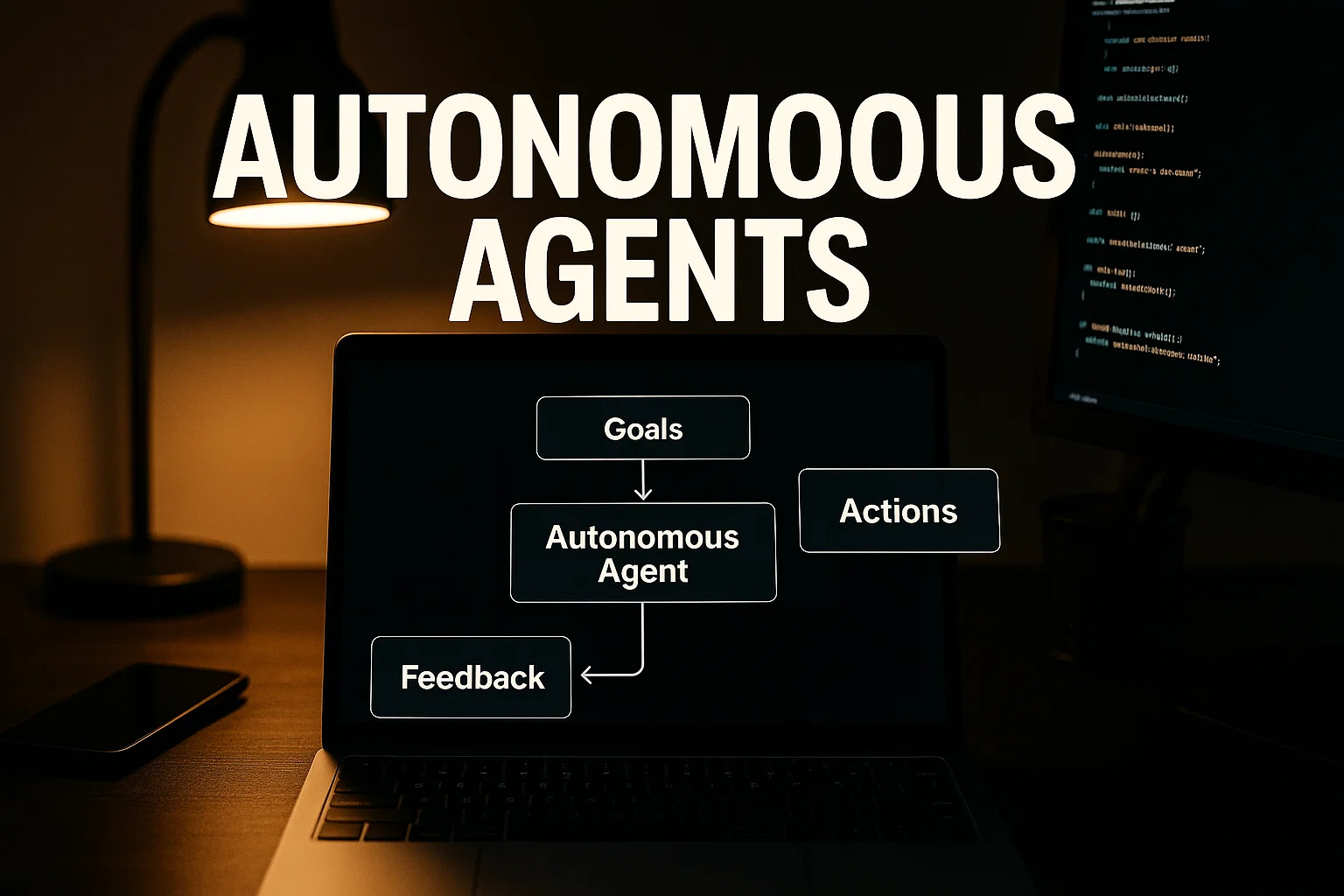

Autonomous AI agents are systems that take goals, plan multi-step actions, call tools or APIs, and adapt based on results. They differ from single-response models because they hold state, choose next steps, and can loop until a goal is met. This makes them well suited to research automation, multi-step customer support, content workflows, and data collection tasks (OpenAI Platform).

How agents work at a glance

- Goal input: a high-level objective from a user or system.

- Planning: the agent decomposes goals into steps and selects tools.

- Execution: calls APIs, runs queries, or performs side effects.

- Feedback loop: validates results, updates state, and re-plans if needed.
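The four stages above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not a definitive design: `AgentState`, `plan`, `execute`, `validate`, and `run_loop` are hypothetical names, and the planner and tool are stand-ins that return canned values instead of calling a model or API.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    """Hypothetical state carried across loop iterations."""
    goal: str
    observations: list = field(default_factory=list)
    done: bool = False


def plan(state):
    # Stand-in planner: a real agent would ask a model for the next step.
    return "finish" if len(state.observations) >= 2 else "search"


def execute(step, state):
    # Stand-in tool call: returns a fake observation instead of calling an API.
    return f"{step} result {len(state.observations) + 1}"


def validate(observation):
    # Stand-in check: a real validator might enforce a schema or a policy.
    return bool(observation)


def run_loop(goal, max_steps=5):
    state = AgentState(goal=goal)
    for _ in range(max_steps):              # hard cap so the loop always ends
        step = plan(state)                  # planning
        if step == "finish":
            state.done = True
            break
        observation = execute(step, state)  # execution
        if validate(observation):           # feedback: keep only valid results
            state.observations.append(observation)
    return state
```

The `max_steps` cap is the important design choice here: without it, a planner that never returns "finish" would loop forever.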

Why they matter for teams

Agents can reduce repetitive work, speed up knowledge tasks, and chain web, internal, and AI tools to deliver outcomes instead of raw responses. That said, they increase operational risk if unchecked, so governance and monitoring are essential. Recent industry analysis highlights both the productivity upside and the need for governance when agents act autonomously (Reuters).

Define a practical agent strategy

Before coding, pick a clear scope and constraints. Treat agents like product features, not experiments.

Start with a use-case ledger

List candidate tasks, estimate human time saved, and classify impact and risk. Prioritize tasks that are repetitive, rules-light, and yield measurable outcomes when automated.
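One way to keep such a ledger is a small ranked list. The sketch below is illustrative only: the `Candidate` fields, the 1 to 3 risk scale, the scoring rule (hours saved divided by risk), and every number in `ledger` are made-up placeholders, so substitute your own estimates.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    hours_saved_per_week: float  # estimated human time saved
    risk: int                    # 1 = low, 3 = high (illustrative scale)


def rank(candidates):
    # Higher estimated savings and lower risk sort first.
    return sorted(
        candidates,
        key=lambda c: c.hours_saved_per_week / c.risk,
        reverse=True,
    )


# Placeholder entries, not real measurements.
ledger = [
    Candidate("summarize support tickets", 6.0, 1),
    Candidate("send refund emails", 8.0, 3),
    Candidate("tag incoming leads", 3.0, 1),
]
ranked = rank(ledger)
```

With these placeholder values, the high-risk refund task drops to the bottom even though it has the largest raw time saving.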

Design constraints and human gates

Decide permissible actions, required approvals for risky steps, and the set of tools the agent can call. This reduces blast radius and makes audits simpler.
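A minimal sketch of these constraints, assuming a fixed allowlist and a single approval flag. The tool names, `gate`, and `ToolNotAllowed` are hypothetical; a real system would likely load permissions from configuration and record who approved what.

```python
ALLOWED_TOOLS = {"search", "summarize", "send_email"}  # illustrative allowlist
NEEDS_APPROVAL = {"send_email"}                        # risky: leaves the system


class ToolNotAllowed(Exception):
    """Raised when the agent requests a tool outside its allowlist."""


def gate(tool, approved_by=None):
    """Return True if the call may proceed, False if it must wait for approval."""
    if tool not in ALLOWED_TOOLS:
        raise ToolNotAllowed(tool)
    if tool in NEEDS_APPROVAL and approved_by is None:
        return False
    return True
```

Calling `gate` before every tool invocation keeps the permission check in one place, which is also what makes it easy to audit.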

Safety and traceability

Log prompts, model versions, tool calls, and decision paths. Make audit logs immutable and searchable so you can reproduce and debug agent decisions later.
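One common way to approximate an immutable log is a hash chain, where each entry's hash covers the previous entry. The sketch below is an assumption about how you might do this with the standard library, not a prescribed format: `append_entry`, `verify`, and the record fields are hypothetical. Note that it makes tampering detectable rather than impossible, so production systems usually pair it with write-once storage.

```python
import hashlib
import json


def append_entry(log, record):
    """Append a record whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    payload = json.dumps(record, sort_keys=True)
    entry_hash = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
    log.append({"record": record, "prev": prev_hash, "hash": entry_hash})
    return log


def verify(log):
    """Recompute every hash; return False if any entry was altered."""
    prev_hash = "0" * 64
    for entry in log:
        payload = json.dumps(entry["record"], sort_keys=True)
        expected = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True
```

A record might hold the prompt, the pinned model version, and the tool call for one step, so that a later replay can walk the chain in order.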

How to build an autonomous agent, step by step

Use this hands-on workflow to get an agent from idea to controlled production.

- Pick a single, high-value task. Choose something scoped and measurable, for example “summarize weekly support tickets and draft triage actions.”

- Design the state and tools. Define a state schema, allowed tools (search, email, database), and inputs/outputs.

- Write structured prompts and guardrails. Include constraints, success criteria, and safety checks in the instructions.

- Prototype in sandbox. Run the agent with fake or limited data, capture logs, and iterate on failures.

- Add monitoring and human-in-the-loop. Require human approval for actions that cost money, publish externally, or affect safety.

- Pilot with limited users, measure, repeat. Track completion rate, time saved, human overrides, and error types.
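For the final step, the pilot metrics can be aggregated from per-run records. This is a rough sketch: `summarize_runs` and the record keys (`completed`, `human_override`, `minutes_saved`, `error`) are assumed names, not a standard schema.

```python
from collections import Counter


def summarize_runs(runs):
    """Aggregate pilot metrics from a list of run records (plain dicts)."""
    total = len(runs)
    if total == 0:
        return {"completion_rate": 0.0, "override_rate": 0.0,
                "minutes_saved": 0.0, "errors": {}}
    completed = sum(1 for r in runs if r["completed"])
    overridden = sum(1 for r in runs if r["human_override"])
    # Only count time saved on runs that actually finished.
    minutes_saved = sum(r["minutes_saved"] for r in runs if r["completed"])
    errors = Counter(r["error"] for r in runs if r.get("error"))
    return {
        "completion_rate": completed / total,
        "override_rate": overridden / total,
        "minutes_saved": minutes_saved,
        "errors": dict(errors),
    }
```

Grouping errors by type, rather than only counting them, is what tells you which failure to fix first in the next iteration.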

Minimal Python agent loop (copy-paste)

    # Tiny agent loop skeleton; replace the model and tool calls with real clients.
    import time

    def call_model(prompt):
        # Placeholder: replace with an actual LLM client call.
        return {"plan": ["search", "summarize"], "notes": "ok"}

    def call_search(query):
        # Placeholder: replace with a real search or API call.
        return ["result1", "result2"]

    def run_agent(goal):
        try:
            # Get a plan from the model.
            resp = call_model(f"Goal: {goal}\nReturn steps as JSON.")
            plan = resp.get("plan", [])
            results = []
            for step in plan:
                if step == "search":
                    results += call_search(goal)
                # Add other tool handlers here.
                time.sleep(0.1)  # brief pause between steps
            # Finish with a model call that synthesizes the results.
            final = call_model(f"Synthesize: {results}")
            return final.get("notes", "no output")
        except Exception as e:
            # Minimal error handling: wrap the error and surface it to the caller.
            raise RuntimeError("Agent failed") from e

This skeleton shows the loop: plan, execute, synthesize.

Use this pattern as a test harness to validate the agent's behaviors before adding production tool access.

Best practices, tools, and resources

Adopt clear design patterns and pick tools that support auditability and orchestration.

Best practices

- Limit tool scope to reduce unintended effects.

- Require explicit human approval for critical actions like payments or public posts.

- Use versioned prompts and model pins so behavior is reproducible.
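Versioned prompts and model pins can be as simple as a frozen record with a content fingerprint. The sketch below is one possible shape, not an established convention: `PromptVersion`, `TRIAGE_V1`, and the model identifier are placeholders invented for illustration.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: str
    model: str     # pinned model identifier; the value below is a placeholder
    template: str

    @property
    def fingerprint(self) -> str:
        # Short content hash: changes whenever the template or pinned model changes.
        digest = hashlib.sha256(f"{self.model}\n{self.template}".encode("utf-8"))
        return digest.hexdigest()[:12]


TRIAGE_V1 = PromptVersion(
    name="ticket-triage",
    version="1.0.0",
    model="example-model-2024-01-01",  # hypothetical pin, not a real model name
    template="Goal: {goal}\nReturn steps as JSON.",
)
```

Logging the fingerprint with each run lets you tell later whether two runs used exactly the same prompt and model, even if someone forgot to bump the version string.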

Recommended tools

- OpenAI agents guide and primitives. Pros: established design patterns and primitives. Cons: provider lock-in risk. Install tip: follow the building-agents learning track and test with sandbox keys (OpenAI Platform).

- LangChain or other orchestration libraries. Pros: composable tools and community patterns. Cons: larger dependency surface. Install tip: install via your package manager and run sample chains locally.

- Observability and workflow engine (e.g., Prefect). Pros: retries, alerts, audit trails. Cons: extra infrastructure. Install tip: start with a hosted trial and wire a simple flow before production.

Short pro/con notes: orchestration simplifies logic but adds operational overhead; retrievers improve grounding but require data hygiene.

Challenges, legal and ethical considerations, troubleshooting

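Agents raise risks that single-response models do not: they take real actions, hold access rights to tools and data, and can drift out of alignment with the intended goal.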

Common failure modes

- Goal drift: agents optimize for a mis-specified reward or goal.

- Tool misuse: malformed calls or unexpected side effects from integrated tools.

- Data leakage: agents expose sensitive data if not sandboxed.
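Tool misuse in particular can often be caught before execution by validating each proposed call. The sketch below assumes a hand-written schema of required argument names and types; `TOOL_SCHEMAS` and `validate_call` are hypothetical, and real projects may prefer a schema library instead.

```python
TOOL_SCHEMAS = {
    # tool name -> required argument names and expected types (illustrative)
    "search": {"query": str},
    "send_email": {"to": str, "body": str},
}


def validate_call(tool, args):
    """Return a list of problems; an empty list means the call looks well-formed."""
    if tool not in TOOL_SCHEMAS:
        return [f"unknown tool: {tool}"]
    schema = TOOL_SCHEMAS[tool]
    problems = []
    for name, expected in schema.items():
        if name not in args:
            problems.append(f"missing argument: {name}")
        elif not isinstance(args[name], expected):
            problems.append(f"wrong type for {name}")
    for name in args:
        if name not in schema:
            problems.append(f"unexpected argument: {name}")
    return problems
```

Returning the full list of problems, rather than raising on the first one, gives the agent or a reviewer enough detail to repair the call in one pass.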

Compliance checklist

- Maintain an inventory of agents and their tool permissions.

- Log prompts, model versions, and all external calls.

- Require human approvals based on risk tiers.

- Implement rate limiting, retry limits, and emergency stop controls.

- Document consent and data handling for user-facing agents.
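The rate-limit, retry-limit, and emergency-stop item can be sketched as a thin wrapper around every tool call. This is an illustrative design only: `RunControls`, `EmergencyStop`, and the default limits are assumptions, and the stop flag here is in-process, whereas a real deployment would probably read it from shared storage.

```python
import time


class EmergencyStop(Exception):
    """Raised when a run is halted or has used up its call budget."""


class RunControls:
    """Hypothetical per-run limits: call budget, retry cap, and a stop flag."""

    def __init__(self, max_calls=20, max_retries=2, min_interval=0.0):
        self.max_calls = max_calls
        self.max_retries = max_retries
        self.min_interval = min_interval  # seconds between calls (simple rate limit)
        self.calls = 0
        self.stopped = False
        self._last_call = None

    def stop(self):
        self.stopped = True

    def call(self, fn, *args):
        for attempt in range(self.max_retries + 1):
            if self.stopped:
                raise EmergencyStop("run halted by operator")
            if self.calls >= self.max_calls:
                raise EmergencyStop("call budget exhausted")
            if self._last_call is not None:
                wait = self.min_interval - (time.monotonic() - self._last_call)
                if wait > 0:
                    time.sleep(wait)
            self.calls += 1
            self._last_call = time.monotonic()
            try:
                return fn(*args)
            except Exception:
                # Re-raise once the retry cap is reached; otherwise try again.
                if attempt == self.max_retries:
                    raise
```

Every attempt, including retries, counts against the call budget, so a tool that keeps failing cannot quietly consume unlimited calls.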

Alternatives and mitigations

If risk is high, prefer human-assisted automation, scheduled batch jobs, or narrow rule-based assistants until the agent design stabilizes.

Industry reporting warns that agents increase both capabilities and risks, and recommends governance, monitoring, and legal clarity (Reuters).

Practical guides from platform vendors recommend incremental, sandboxed development and explicit human gates for early deployments (OpenAI Platform).

Measuring success and improving CTR with agent content

To fix on-page audit issues like low CTR, document your agent program publicly and create bite-sized, benefit-led titles and meta descriptions for pages describing agent features. Test titles and meta with A/B experiments and surface measurable benefits, for example time saved per task.

Use Google’s people-first content guidance to keep the copy helpful and credible, and show E-E-A-T signals by including first-person experience and author credentials (Google for Developers).

Implementation tips for engineering teams

- Version prompts and store them in the repo.

- Attach an immutable log to each run for replay and auditing.

- If you pass secrets between agent runs, encrypt the sensitive payloads, for example with a hybrid scheme that uses a key encapsulation mechanism (KEM) to protect the symmetric key.

- Automate safety smoke tests into CI so deployments block on policy failures.
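As a sketch of the last tip, a safety smoke test can be an ordinary unit test that fails when an agent config violates policy. Everything named here is hypothetical: the tool sets, the config keys (`tools`, `requires_approval`), and `policy_violations` stand in for whatever policy your team defines.

```python
import unittest

# Hypothetical policy: tools an agent config may request.
APPROVED_TOOLS = {"search", "summarize"}
HIGH_RISK_TOOLS = {"send_payment", "publish_post"}


def policy_violations(agent_config):
    """Return human-readable violations for one agent config (a plain dict)."""
    violations = []
    for tool in agent_config.get("tools", []):
        if tool in HIGH_RISK_TOOLS and not agent_config.get("requires_approval", False):
            violations.append(f"{tool} enabled without an approval gate")
        elif tool not in (APPROVED_TOOLS | HIGH_RISK_TOOLS):
            violations.append(f"{tool} is not on the approved list")
    return violations


class SafetySmokeTest(unittest.TestCase):
    def test_pilot_agent_passes_policy(self):
        config = {"tools": ["search", "summarize"], "requires_approval": False}
        self.assertEqual(policy_violations(config), [])

    def test_ungated_payment_is_flagged(self):
        config = {"tools": ["send_payment"], "requires_approval": False}
        self.assertEqual(len(policy_violations(config)), 1)
```

Running the file with `python -m unittest` in a CI job exits non-zero on any failure, which is what lets the pipeline block the deployment.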

Conclusion and CTA

Autonomous AI agents can cut repetitive work and unlock new product capabilities, but they need careful design, monitoring, and staged rollout. Start with a measurable pilot, enforce human gates for high-risk actions, and instrument every run for traceability. If you want templates, CI checks, or CMS-ready writeups that fix low CTR and improve trust signals, Alamcer builds practical guides, automation templates, and custom development to help teams deploy agents safely and quickly.

Key takeaways:

- Start small with a measurable pilot.

- Log prompts, model versions, and tool calls for audit.

- Use human approvals for high-risk actions.

- Iterate fast and measure time saved and error rates.

FAQs

What are autonomous AI agents?

Autonomous AI agents are goal-driven systems that plan and execute multi-step tasks across tools and services with minimal human input, using models, planners, and feedback loops to reach objectives.

When should I use an autonomous agent instead of a script?

Use agents for tasks needing flexible planning, multi-step decision making, or tool orchestration. Use scripts for deterministic, repeatable tasks where no planning is required.

How do I keep agents from making harmful actions?

Limit tool permissions, add human approval gates, require explicit checks for risky operations, and monitor run outputs in real time.

Which metrics show an agent is successful?

Track completion rate, time saved per task, human override frequency, failure modes, and the business outcome tied to the task.

How do I debug a failed agent run?

Replay the immutable logs, reproduce the prompt and state in sandbox, and step through each tool call to isolate the failing component.

How would you describe autonomous AI agents in one sentence?

They are systems that plan, act, and adapt to complete goals by combining models, tools, and feedback, designed for multi-step automation and decision workflows.

Are autonomous agents regulated or subject to legal risk?

Yes, depending on the actions they take: contracts, financial transactions, and personal data handling all create legal exposure. Involve legal and compliance teams for public or high-risk deployments.

Can I run agents offline or on low-power devices?

Lightweight agents can run on constrained devices for specific tasks, but many agent designs assume cloud-backed tools and models for richer capabilities.

How do I train an agent to be reliable?

Improve prompt engineering, add validation steps, use structured state, and run large-scale test suites to uncover edge cases before production deployment.

Who should I contact for help building agents?

Contact Alamcer for consulting, templates, and engineering services to prototype, test, and deploy agentic systems with governance and monitoring baked in.

Compliance and disclaimer

This guide is informational and not legal advice. Follow privacy policies, terms of service, and applicable laws such as data protection rules, and consult a professional for legal or compliance decisions.