When people search for “ai automation tools without restrictions”, what they usually mean is: “I want AI automation tools that give me flexibility — self-hosting, extensible APIs, permissive licenses, and minimal vendor lock-in.” That’s a reasonable goal. Who wouldn’t prefer tools they can run on their infrastructure, integrate deeply, and tweak to their needs — without surprise fees or black-box limits?

But there’s an important caveat: “without restrictions” must not mean “without responsibility.” Legal, ethical, and safety constraints exist for good reasons. The sweet spot is tools and architectures that give you operational freedom while keeping you compliant and secure.

In this post I’ll show you how to find and architect flexible AI automation stacks that feel unrestricted in practice: open-source foundations, self-hostable ML infra, no-code builders with export features, developer-first APIs, and governance practices that let you move fast without getting burned. I’ll also cover tradeoffs, pricing realities, and a pragmatic migration plan.

“Freedom in tooling works best when paired with a governance plan. Build the guardrails before you go fast.”

— practical rule

Why “no restrictions” is often a code phrase

People want fewer restrictions for several reasons:

- Avoid vendor lock-in (easy to switch providers or host yourself).

- Control costs and data residency (run on-prem or in your cloud).

- Customize behavior (extend or fine-tune models).

- Meet compliance and privacy rules (keep data in-region).

- Ship products faster without API quotas or rigid UI flows.

What they don’t mean — and shouldn’t mean — is “circumvent safety or licensing.” That’s where freedom becomes liability.

Four practical paths to flexible AI automation

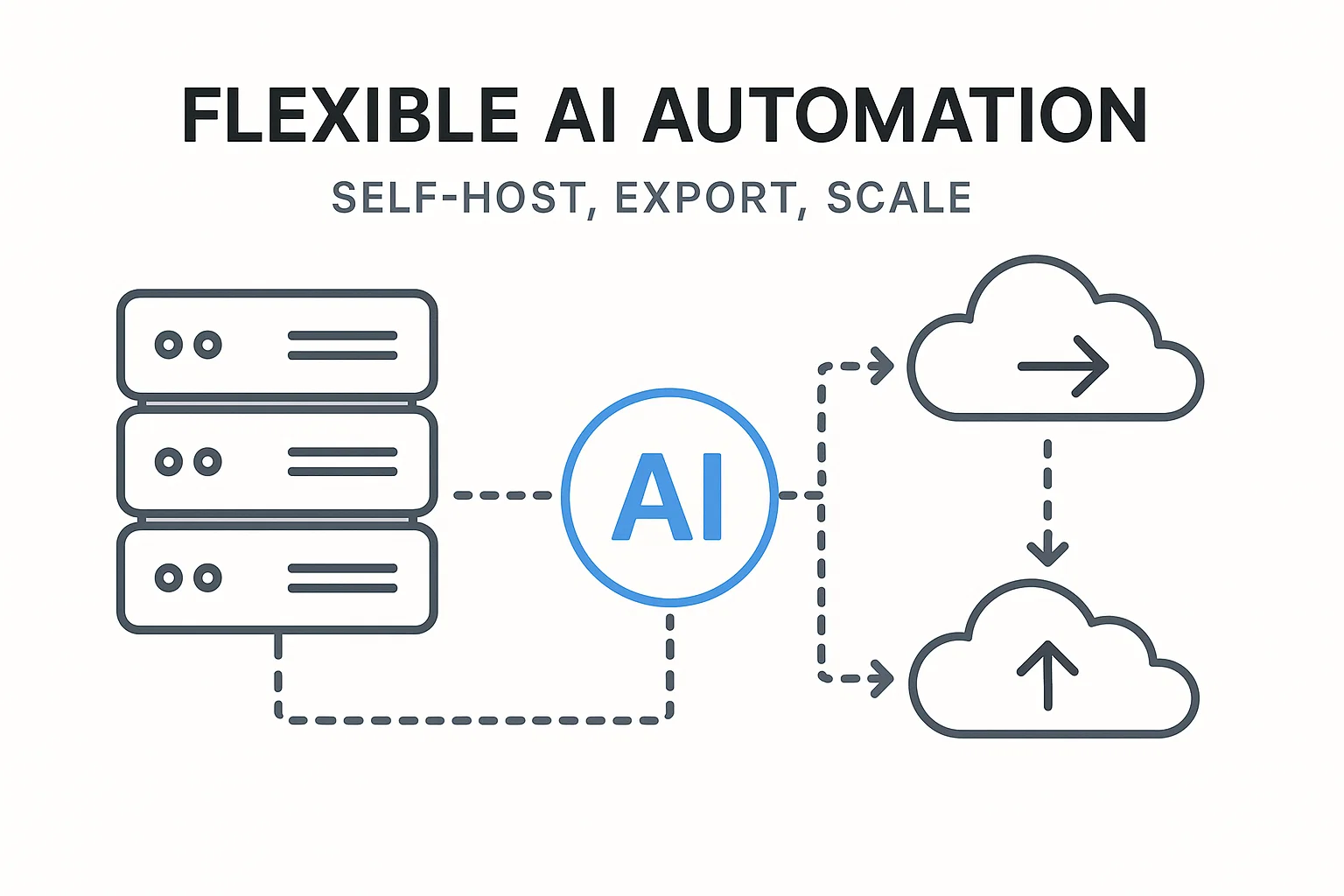

1) Self-hosted open-source stacks (max control)

If you want the fewest platform-imposed constraints, self-hosting is the cleanest route. Open-source tools let you run inference, orchestration, and data pipelines inside your VPC.

What to expect

- Full control over compute, networking, and data retention.

- Ability to tweak models, infra, and connectors.

- No per-request vendor fees (you pay for infra).

- More DevOps and security work — you’re responsible for updates and hardening.

Typical building blocks

- Model serving: TorchServe, Triton, or open-source inference servers.

- Orchestration: Prefect, Apache Airflow, or a lighter job scheduler.

- Automation logic: LangChain-like frameworks or custom microservices.

- Data connectors: Airbyte, custom ETL scripts, or Postgres + Redis for state.

“Self-hosting gives you 'no restrictions' in practice — at the cost of ops responsibility.”

2) Hybrid: managed infra + exportable logic (best balance)

Not every team can run full ML infra. Hybrid platforms let you prototype in the cloud, then export the workflow or code to self-host.

Why choose hybrid

- Quick iteration on managed infra, then export for compliance or scale.

- Use vendor features (fine-tuning UI, telemetry) when helpful — but keep a migration path.

- Good for teams that need speed now and control later.

What to look for

- Platforms with export features (workflow code, Docker images, or Terraform).

- Tools that allow private model uploads and runtime control.

- Clear licensing and model provenance.

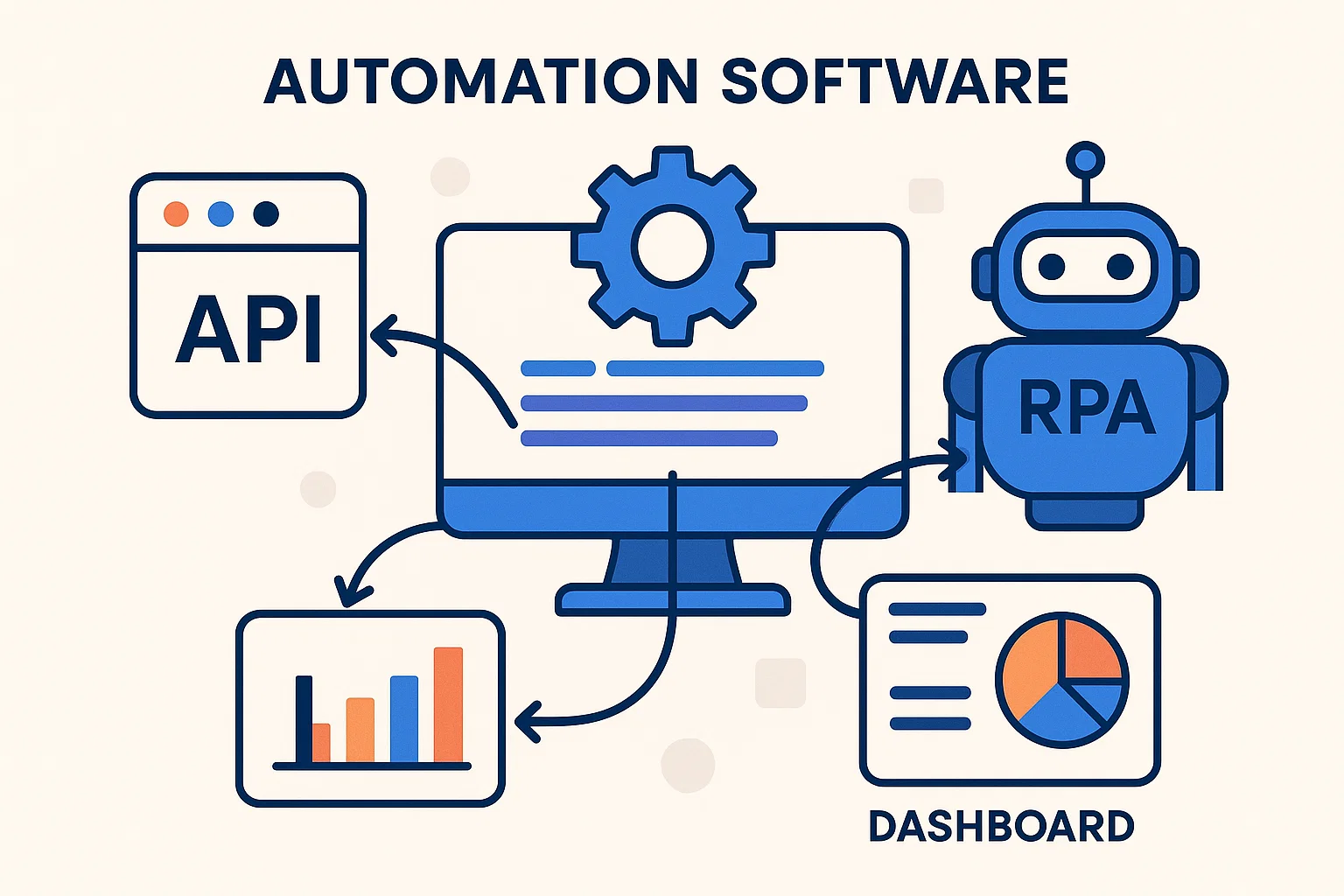

3) Developer-first APIs with permissive terms

Some API-first vendors are notably permissive on usage and provide generous quotas for prototyping. Look for clear SLAs, transparent pricing, and SDKs that let you wrap the API into your microservices.

How this helps

- Fast integration into existing apps and automation pipelines.

- Good developer ergonomics: SDKs, webhooks, and examples.

- Reasonable for production if your provider’s pricing and compliance match your needs.

Key features to prioritize

- Webhook and async job patterns (so you can scale).

- Fine-grained rate limits, custom plans, and enterprise contracts.

- Clear data retention / training policies.
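
To make the webhook and async job pattern concrete, here is a minimal sketch: `submit` returns a job ID immediately, and the result arrives later through a callback that plays the role of your webhook handler. Everything here is an in-memory stand-in. `FakeJobAPI`, `submit`, `drain`, and the callback signature are hypothetical names for illustration, not any vendor's SDK.

```python
import asyncio
import uuid
from typing import Awaitable, Callable

Callback = Callable[[str, str], Awaitable[None]]


class FakeJobAPI:
    """In-memory stand-in for an async inference API (hypothetical, not a real SDK)."""

    def __init__(self) -> None:
        self._tasks: set[asyncio.Task] = set()

    async def submit(self, prompt: str, on_done: Callback) -> str:
        # Return a job ID right away; the work finishes in the background.
        job_id = uuid.uuid4().hex
        task = asyncio.create_task(self._run(job_id, prompt, on_done))
        self._tasks.add(task)
        task.add_done_callback(self._tasks.discard)
        return job_id

    async def _run(self, job_id: str, prompt: str, on_done: Callback) -> None:
        await asyncio.sleep(0.01)  # simulated model latency
        await on_done(job_id, prompt.upper())  # stands in for the webhook call

    async def drain(self) -> None:
        # Wait for all in-flight jobs (useful in tests and on shutdown).
        await asyncio.gather(*self._tasks)


async def run_demo() -> dict[str, str]:
    results: dict[str, str] = {}

    async def webhook(job_id: str, output: str) -> None:
        results[job_id] = output  # a real handler would persist this

    api = FakeJobAPI()
    ids = [await api.submit(p, webhook) for p in ("hello", "world")]
    await api.drain()
    return {"first": results[ids[0]], "second": results[ids[1]]}
```

The point of the shape: your caller never blocks on model latency, so throughput is bounded by how many jobs you can queue, not by how long each one takes.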

4) No-code / low-code automation that exports logic

If you need non-developers to build workflows, pick no-code tools that support code export or open connectors. This avoids being trapped in proprietary visual flows.

What to require

- Export to code (Python, JSON, or Terraform) or a self-hostable runtime.

- Versioning and team access controls.

- Plugin or webhook support so devs can extend flows.

Good for

- Marketing ops, support teams, and internal workflows with frequent changes.
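
As a rough picture of what "export to code" buys you, here is a sketch of a workflow exported as JSON plus a small runner that maps step names to plain Python functions. The schema (`steps`, `action`, `params`) and the action names are invented for this example; real tools each have their own export format.

```python
import json
from typing import Any, Callable

# Hypothetical export from a visual builder: an ordered list of named steps.
EXPORTED = """
{
  "name": "tag-support-ticket",
  "steps": [
    {"action": "strip", "params": {}},
    {"action": "truncate", "params": {"max_chars": 20}},
    {"action": "add_tag", "params": {"tag": "triage"}}
  ]
}
"""

# Registry of step implementations that developers own and can extend.
ACTIONS: dict[str, Callable[..., dict[str, Any]]] = {
    "strip": lambda item: {**item, "text": item["text"].strip()},
    "truncate": lambda item, max_chars: {**item, "text": item["text"][:max_chars]},
    "add_tag": lambda item, tag: {**item, "tags": item.get("tags", []) + [tag]},
}


def run_workflow(definition: str, item: dict[str, Any]) -> dict[str, Any]:
    workflow = json.loads(definition)
    for step in workflow["steps"]:
        action = ACTIONS[step["action"]]  # unknown actions raise KeyError
        item = action(item, **step["params"])
    return item
```

Because the definition is plain data, it can live in version control and run anywhere the registry runs, which is the escape hatch from a proprietary visual flow.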

How to evaluate “flexible” AI automation tools — checklist

- Self-hosting option: Can you run it on your infra or export to run elsewhere?

- Open formats: Does it use standard model formats (ONNX, TorchScript)?

- API ergonomics: SDKs, async jobs, webhooks, language support.

- Licensing clarity: Commercial rights, model training usage, and redistribution rules.

- Data handling: Where is data stored? How long? Is there an option to opt out of training?

- Scaling model: Can you shard/parallelize or use GPU pools?

- Integrations: Connectors for databases, message queues, and orchestration tools.

- Observability: Logs, tracing, metrics, and replay for automated flows.

- Security: Secrets, token rotation, private networking, and audit logs.

- Governance hooks: Approval gates, human-in-the-loop toggles, and policy checks.

Model & license considerations (you can’t skip this)

Open-source models vary in license and usage terms. If you plan to fine-tune, redistribute, or build commercial services atop a model, read the license carefully. Some models allow internal use but restrict redistribution; others require attribution or include patent clauses.

Practical rules

- Pick models with permissive licenses (e.g., BSD, Apache 2.0) for wide commercial use.

- Retain provenance metadata (which model/version you used) for audits.

- Document training / fine-tuning datasets to defend against claims.
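
One lightweight way to retain provenance is to write a small record next to every deployed model. The sketch below is only an illustration: the field names and the `APPROVED_LICENSES` set are assumptions, and which licenses actually belong on that list is a decision for your legal review.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ModelProvenance:
    """Audit record for one deployed model (field names are illustrative)."""
    name: str
    version: str
    license: str
    weights_sha256: str
    finetune_datasets: tuple[str, ...] = ()

    def to_json(self) -> str:
        # Sorted keys keep the record stable for diffs and audits.
        return json.dumps(asdict(self), sort_keys=True)


def sha256_of(data: bytes) -> str:
    # Hash of the weights file contents, so the exact artifact is identifiable.
    return hashlib.sha256(data).hexdigest()


# Licenses cleared for commercial use; this set is an assumption, not legal advice.
APPROVED_LICENSES = {"Apache-2.0", "MIT", "BSD-3-Clause"}


def is_cleared(record: ModelProvenance) -> bool:
    return record.license in APPROVED_LICENSES
```

A deploy script could refuse to ship any model where `is_cleared` returns `False`, which turns "verify licenses up front" into a check rather than a habit.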

“Freedom without legal clarity is a time bomb. Verify licenses up front.”

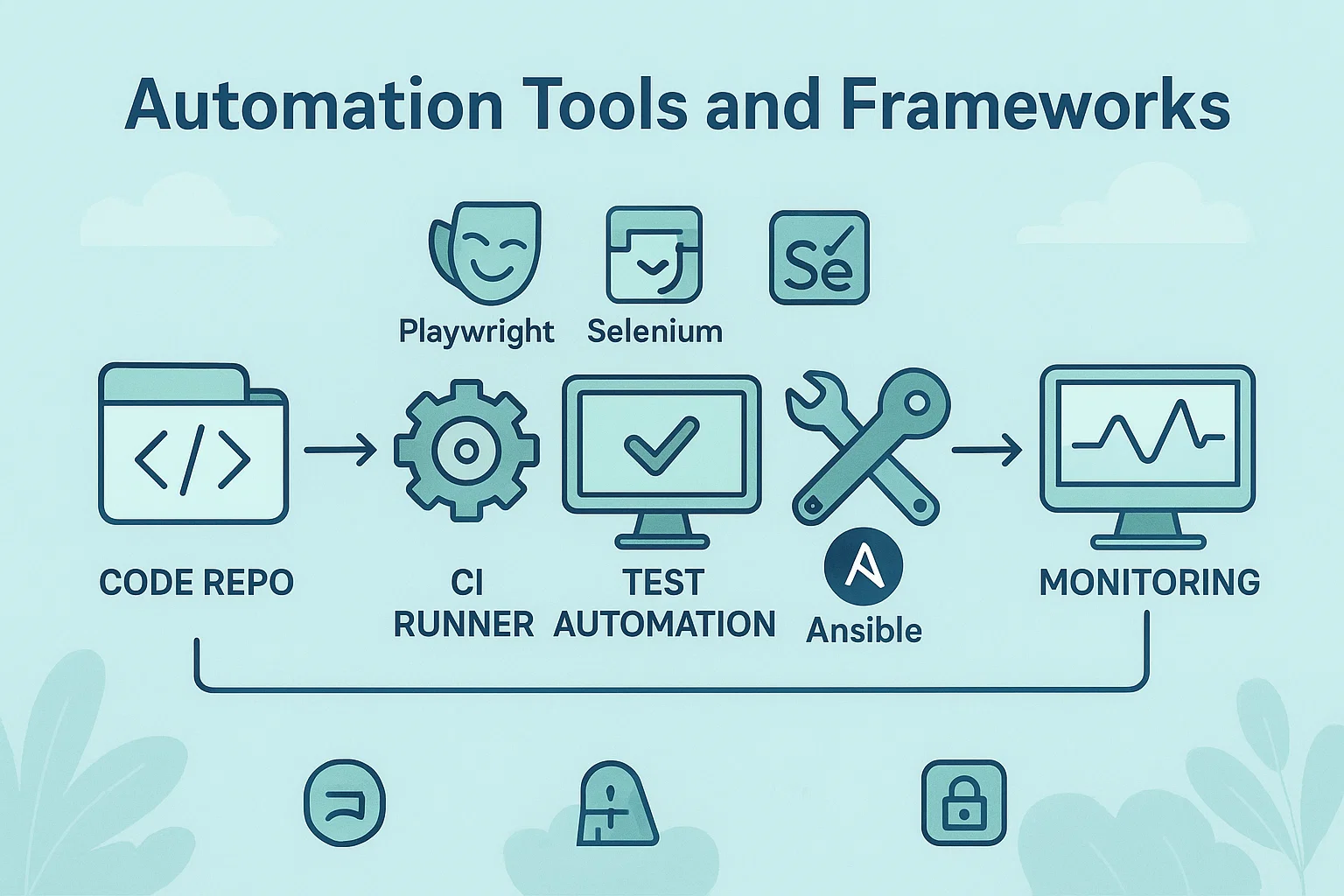

Operational architecture: an example flexible stack

Lightweight, flexible automation ladder

- Event source: webhook, message queue, or scheduler.

- Orchestration: Prefect/Apache Airflow for job flow and retries.

- Worker layer: containerized microservices (Python/Node/Rust) that call local or hosted models.

- Model layer: self-hosted Triton or managed model endpoint with private VPC peering.

- Cache & state: Redis for ephemeral queues, Postgres for transactional state.

- Audit & monitoring: Prometheus + Grafana for metrics, plus structured logs and traces (e.g., via OpenTelemetry).

- Governance: policy engine that blocks risky automations and routes human-approval flows.

This structure gives you the freedom to swap components, scale parts independently, and export the workflow if needed.
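
The "swap components" part mostly comes down to having worker code depend on an interface instead of a vendor SDK. A minimal sketch, where `ModelBackend`, `LocalBackend`, `HostedBackend`, and `summarize_ticket` are all hypothetical names and the backends are stubs rather than real clients:

```python
from typing import Protocol


class ModelBackend(Protocol):
    def generate(self, prompt: str) -> str: ...


class LocalBackend:
    """Stub for a self-hosted model server; a real one would call your endpoint."""

    def generate(self, prompt: str) -> str:
        return f"[local] {prompt}"


class HostedBackend:
    """Stub for a managed endpoint reached over private networking."""

    def generate(self, prompt: str) -> str:
        return f"[hosted] {prompt}"


def summarize_ticket(backend: ModelBackend, ticket: str) -> str:
    # Worker logic only knows the interface, so backends can be swapped freely.
    return backend.generate(f"Summarize: {ticket}")
```

Moving from hosted to self-hosted then means changing which backend you construct, not rewriting every worker.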

Safety & compliance — yes, even with “no restrictions”

Removing vendor constraints doesn’t remove legal and ethical obligations. In fact, when you self-host, you are fully responsible.

Policies to enforce

- Human-in-the-loop review for high-risk actions (payments, moderation, harmful content).

- Logging and audit trails for automated decisions.

- Rate limits and quotas to avoid runaway automation.

- Data minimization and retention policies to meet privacy rules (GDPR, CCPA).

- Security controls: secrets manager, encrypted storage, and network segmentation.
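
For the rate-limit item, a token bucket is one common way to cap how fast an automation can act. This is a single-process sketch under simple assumptions (no locking, no shared state across workers); the `clock` parameter is there only so the behavior can be tested without sleeping.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts up to `capacity`, then `refill_per_sec` actions per second."""

    def __init__(self, capacity: int, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, capped at capacity.
        elapsed = now - self._last
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_per_sec)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False
```

Call `allow()` before each automated action and skip or queue the action when it returns `False`. A multi-worker deployment would need the counter in shared storage such as Redis.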

Governance patterns

- Approval gates: workflows require sign-off for production runs.

- Simulation mode: run automations in dry-run mode and compare outputs before committing.

- Canary releases: roll out automations to a small subset first.

- Explainability: attach reasoning metadata for ML-driven actions.
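
Approval gates and simulation mode can share one small choke point that every automated action passes through. The sketch below is illustrative only: the `HIGH_RISK` categories, the `Action` fields, and the outcome strings are assumptions, and the real side effect is left as a comment.

```python
from dataclasses import dataclass, field
from typing import Optional

HIGH_RISK = {"payment", "moderation"}  # illustrative categories


@dataclass
class Action:
    kind: str
    payload: dict
    approved_by: Optional[str] = None


@dataclass
class Executor:
    dry_run: bool = True
    log: list = field(default_factory=list)

    def execute(self, action: Action) -> str:
        if action.kind in HIGH_RISK and action.approved_by is None:
            outcome = "blocked: needs human approval"
        elif self.dry_run:
            outcome = "dry-run: would execute"
        else:
            outcome = "executed"  # the real side effect would go here
        self.log.append(f"{action.kind}: {outcome}")  # audit trail entry
        return outcome
```

Defaulting `dry_run` to `True` means a misconfigured deployment simulates instead of acting, which is usually the safer failure mode.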

“Flexibility without governance is chaos. Build the safety rails early.”

Migration plan — go from restricted platform to flexible stack (practical steps)

- Audit current flows: list all automations, data flows, and APIs.

- Identify exportable workflows: start with lower-risk automations (data syncs, notifications).

- Prototype self-hosted equivalent: a single flow with local model serving and orchestration.

- Add observability: logs, dashboards, and alerts from day one.

- Legal review: ensure model licenses and data processes are compliant.

- Gradual cutover & rollback plan: run both systems in parallel and compare outputs.

- Documentation & runbooks: that’s the non-sexy part that saves you in incidents.
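
The parallel-run step can be as simple as feeding the same inputs to both systems and recording where they disagree. A minimal sketch, assuming both flows can be called as plain functions (`compare_in_shadow` and its report keys are hypothetical names):

```python
from typing import Callable, Iterable


def compare_in_shadow(
    legacy: Callable[[str], str],
    candidate: Callable[[str], str],
    inputs: Iterable[str],
) -> dict:
    """Run both systems on the same inputs and report disagreements."""
    mismatches = []
    total = 0
    for item in inputs:
        total += 1
        old, new = legacy(item), candidate(item)
        if old != new:
            mismatches.append({"input": item, "legacy": old, "candidate": new})
    rate = len(mismatches) / total if total else 0.0
    return {"total": total, "mismatch_rate": rate, "mismatches": mismatches}
```

Exact equality suits deterministic flows like data syncs and notifications. For generated text you would likely swap in a looser comparison, such as a similarity threshold or human spot checks.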

Cost realities & performance tradeoffs

- Running GPUs is expensive, so expect higher infra costs for large models. In return, you gain cost predictability compared with per-request API fees.

- Hybrid serving: pre-generate heavy content in batch jobs and use smaller real-time models for interactive UX.

- Autoscaling helps, but capacity planning is still required; plan for peak usage, not average.
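
A quick break-even calculation helps ground the self-host vs. API decision. The function below is a back-of-the-envelope sketch: it treats infra as a fixed monthly cost and ignores engineering time, and any prices you plug in are your own estimates, not figures from a vendor.

```python
import math


def breakeven_requests(
    monthly_infra_cost: float,
    api_cost_per_request: float,
    selfhost_cost_per_request: float = 0.0,
) -> float:
    """Monthly request volume above which self-hosting is cheaper than the API."""
    saving = api_cost_per_request - selfhost_cost_per_request
    if saving <= 0:
        return math.inf  # self-hosting never catches up at these prices
    return monthly_infra_cost / saving
```

With purely hypothetical numbers, a 2,000 per month GPU budget against an API fee of 0.004 per request breaks even at roughly 500,000 requests per month. Below that volume the API is cheaper; above it, the fixed infra cost wins.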

Tools / keywords to look for

- self-hosted AI automation

- open-source automation frameworks

- developer-first AI APIs

- exportable no-code automation

- model serving (Triton, TorchServe)

- orchestration (Prefect, Airflow)

- data connectors (Airbyte, Singer)

- low vendor lock-in automation

- permissive model license

- agentic automation frameworks

Final thoughts — freedom with responsibility

If your goal is true operational flexibility, pick tools that let you run where you control the infra, export your logic, and maintain clear legal posture. The best “ai automation tools without restrictions” in practice are those that pair developer freedom with governance features: self-hostable models, exportable workflows, developer APIs, and auditability.

“Build for portability, automate with care, and treat freedom as a capability that needs guardrails.”

— closing mantra you can actually use